Apr 16, 2014

by Joseph Cesario, Kai Jonas

Continued from Part 1.

Now that some initial points and clarifications have been offered, we can move to the meat of the argument. Direct replication is essential to science. What does it mean to replicate an effect? All effects require a set of contingencies to be in place. To replicate an effect is to set up those same contingencies that were present in the initial investigation and observe the same effect, whereas to fail to replicate an effect is to set up those same contingencies and fail to observe the same effect. Putting aside what we mean by "same effect" (i.e., directional consistency versus magnitude), we don't see any way in which people can reasonably disagree on this point. This is a general point true of all domains of scientific inquiry.

The real question becomes, how can we know what contingencies produced the effect in the original investigation? Or more specifically, how can we separate the important contingencies from the unimportant contingencies? There are innumerable contingencies present in a scientific investigation that are totally irrelevant to obtaining the effect: the brand of the light bulb in the room, the sock color of the experimenter, whether the participant got a haircut last Friday morning or Friday afternoon. Common sense can provide some guidance, but in the end the theory used to explain the effect specifies the necessary contingencies and, by omission, the unnecessary contingencies. Therefore, if one is operating under the wrong theory, one might think some contingencies are important when really they are unimportant, and more interestingly, one might miss some necessary contingencies because the theory did not mention them as being important.

Before providing an example, it might be useful to note that, as far as we can tell, no one has offered any criticism of the logic outlined above. Many sarcastic comments have been made along the lines of, "apparently we can never learn anything because of all these mysterious moderators." And it is true that the argument can be misused to defend poor research practices. But at core, there is no criticism about the basic point that contingencies are necessary for all effects and a theory establishes those contingencies.


Apr 9, 2014

by Joseph Cesario, Kai Jonas

We are probably thought of as "defenders" of priming effects, and with that comes the expectation that we will provide some convincing argument for why priming effects are real. We will do no such thing. The kind of priming under consideration (priming of social categories that results in behavioral priming effects) belongs to a field with relatively few direct replications¹, and we therefore lack good estimates of the effect size of any specific effect. Judgments about the nature of such effects can only be made after thorough, systematic research, which will take some years still (assuming priming researchers change their research practices). And of course, we must be open to the possibility that further data will show any given effect to be small or non-existent.

One really important thing we could do to advance the field toward that future ideal state is to stop calling everything priming. It now appears, especially since the introduction of the awful term "social priming," that any manipulation used by a social cognition researcher can be called priming and, if such a manipulation fails to have an effect, the failure is cheerfully linked to this nebulous, poorly defined class of research called "social priming." There is no such thing as "social priming." There is priming of social categories (elderly, professor), priming of motivational terms (achievement), priming of objects (flags, money), and so on. And there are priming effects at the level of cognition (increased activation of concepts), affect (valence, arousal, or emotions), behavior (walking, Trivial Pursuit performance), or physiology, and some of these priming effects will be automatic and some not (recognizing, even then, that automaticity comes in different varieties; Bargh, 1989). These are all different things and need to be treated separately.


Apr 2, 2014

by Brent W. Roberts

This piece was originally posted to the Personality Interest Group and Espresso (PIG-E) web blog at the University of Illinois.

As of late, psychological science has arguably done more to address the ongoing believability crisis than most other areas of science. Many notable efforts have been put forward to improve our methods. From the Open Science Framework (OSF), to changes in journal reporting practices, to new statistics, psychologists are doing more than any other science to rectify practices that allow far too many unbelievable findings to populate our journal pages.

The efforts in psychology to improve the believability of our science can be boiled down to some relatively simple changes. We need to replace/supplement the typical reporting practices and statistical approaches by:

- Providing more information with each paper so others can double-check our work, such as the study materials, hypotheses, data, and syntax (through the OSF or journal reporting practices).

- Designing our studies so they have adequate power or precision to evaluate the theories we are purporting to test (i.e., use larger sample sizes).

- Providing more information about effect sizes in each report, such as what the effect sizes are for each analysis and their respective confidence intervals.

- Valuing direct replication.

It seems pretty simple. Actually, the proposed changes are simple, even mundane.

What has been most surprising is the consistent pushback and protest against these seemingly innocuous recommendations. When confronted with them, many psychological researchers seem to balk. Despite calls for transparency, most researchers avoid platforms like the OSF. A striking number of individuals argue against, and are quite disdainful of, reporting effect sizes. Direct replications are disparaged. In response to the various recommendations outlined above, prototypical protests are:

- Effect sizes are unimportant because we are “testing theory” and effect sizes are only for “applied research.”

- Reporting effect sizes is nonsensical because our research is on constructs and ideas that have no natural metric, so that documenting effect sizes is meaningless.

- Having highly powered studies is cheating because it allows you to lay claim to effects that are so small as to be uninteresting.

- Direct replications are uninteresting and uninformative.

- Conceptual replications are to be preferred because we are testing theories, not confirming techniques.

While these protestations seem reasonable, the passion with which they are provided is disproportionate to the changes being recommended. After all, if you’ve run a t-test, it is little trouble to estimate an effect size too. Furthermore, running a direct replication is hardly a serious burden, especially when the typical study only examines 50 to 60 odd subjects in a 2×2 design. Writing entire treatises arguing against direct replication when direct replication is so easy to do falls into the category of “the lady doth protest too much, methinks.” Maybe it is a reflection of my repressed Freudian predilections, but it is hard not to take a Depth Psychology stance on these protests. If smart people balk at seemingly benign changes, then there must be something psychologically big lurking behind those protests. What might that big thing be? I believe the reason for the passion behind the protests lies in the fact that, though mundane, the changes that are being recommended to improve the believability of psychological science undermine the incentive structure on which the field is built.
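As a concrete illustration of how little extra work this is, here is a minimal Python sketch (not part of the original post) that recovers Cohen's d from an independent-samples t statistic using the standard identity d = t·√(1/n₁ + 1/n₂), which assumes a pooled-variance (Student's) t-test. The function name and the t value and group sizes in the example are hypothetical.

```python
import math

def cohens_d_from_t(t: float, n1: int, n2: int) -> float:
    """Cohen's d for two independent groups, recovered from the t statistic.

    Uses d = t * sqrt(1/n1 + 1/n2), valid for a pooled-variance t-test.
    """
    return t * math.sqrt(1.0 / n1 + 1.0 / n2)

# Hypothetical result: t(58) = 2.10 with 30 participants per group
d = cohens_d_from_t(2.10, 30, 30)
print(f"d = {d:.2f}")
```

The same one-line conversion applies to any reported independent-samples t, which is why the extra reporting burden is so small.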

I think this confrontation needs to be examined more closely, because we need to consider the challenges and consequences of deconstructing our incentive system and status structure. This, then, raises the question: what is our incentive system, and just what are we proposing to do to it? For this, I believe a good analogy is the dilemma faced by Harry Potter in the last book of the series that bears his name.


Mar 26, 2014

by EJ Wagenmakers

In the epic movie "Zombieland", one of the main protagonists, Tallahassee (played by Woody Harrelson), is about to enter a zombie-infested supermarket in search of Twinkies. Armed with a banjo, a baseball bat, and a pair of hedge shears, he tells his companion it is "time to nut up or shut up". In other words, the pursuit of happiness sometimes requires that you expose yourself to grave danger. Tallahassee could have walked away from that supermarket and its zombie occupants, but then he would never have discovered whether or not it contained the Twinkies he so desired.

At its not-so-serious core, Zombieland is about leaving one's comfort zone and facing one's fears. This, I believe, is exactly the challenge that confronts the proponents of behavioral priming today. To recap, the phenomenon of behavioral priming refers to unconscious, indirect influences of prior experiences on actual behavior. For instance, presenting people with words associated with old age ("Florida", "grey", etc.) primes the elderly stereotype and supposedly makes people walk more slowly; in the same vein, having people list the attributes of a typical professor ("confused", "nerdy", etc.) primes the concept of intelligence and supposedly makes people answer more trivia questions correctly.

In recent years, the phenomenon of behavioral priming has been scrutinized with increasing intensity. Crucial to the debate is that many (if not all) behavioral priming effects appear to vanish into thin air in the hands of other researchers. Many of these researchers (from now on, the skeptics) have concluded that behavioral priming effects are elusive, brought about mostly by confirmation bias, the use of questionable research practices, and selective reporting.


Mar 19, 2014

by Daniel Lakens

There is a reason data collection is part of the empirical cycle. If you have a good theory that allows for what Platt (1964) called ‘strong inferences’, then statistical inferences from empirical data can be used to test theoretical predictions. In psychology, as in most sciences, this testing is not done in a Popperian fashion (where we consider a theory falsified if the data do not support our prediction); rather, we test ideas in Lakatosian lines of research, which can be either progressive or degenerative (e.g., Meehl, 1990). In (meta-scientific) theory, we judge (scientific) theories based on whether they have something going for them.

In scientific practice, this means we need to evaluate research lines. One really flawed way to do this is to use ‘vote-counting’ procedures, where you examine the literature and say: “Look at all these significant findings! And there are almost no non-significant findings! This theory is the best!” Read Borenstein, Hedges, Higgins, & Rothstein (2006), who explain “Why Vote-Counting Is Wrong” (p. 252; but read the rest of the book while you’re at it).
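To see why tallying significant results can mislead, consider a hypothetical literature (all numbers invented for illustration): five small studies, each with 20 participants per group and an observed d of 0.40. The Python sketch below uses a common large-sample approximation for the standard error of d, z-tests, and a simple fixed-effect (inverse-variance) meta-analysis. It illustrates one standard argument against vote counting; it is not a reconstruction of Borenstein et al.'s own presentation.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def se_d(d, n1, n2):
    """Large-sample approximation to the standard error of Cohen's d."""
    return math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))

def two_sided_p(z):
    """Two-sided p-value for a z statistic."""
    return 2 * (1 - Z.cdf(abs(z)))

# Hypothetical literature: five studies, 20 per group, each observing d = 0.40
studies = [(0.40, 20, 20)] * 5

# Vote counting: how many studies are individually significant at .05?
votes = sum(two_sided_p(d / se_d(d, n1, n2)) < 0.05 for d, n1, n2 in studies)

# Fixed-effect meta-analysis: inverse-variance weighted mean effect size
weights = [1 / se_d(d, n1, n2) ** 2 for d, n1, n2 in studies]
pooled = sum(w * d for w, (d, _, _) in zip(weights, studies)) / sum(weights)
pooled_se = math.sqrt(1 / sum(weights))
pooled_p = two_sided_p(pooled / pooled_se)

print(f"significant studies: {votes} of {len(studies)}")
print(f"pooled d = {pooled:.2f} (SE {pooled_se:.2f}), p = {pooled_p:.3f}")
```

Each study alone has p of about .21, so a vote count scores this literature 0 for 5, while the pooled estimate (same d, standard error shrunk by a factor of √5) has p of about .005.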


Mar 12, 2014

by Åse Innes-Ker

We have lined up a nice set of posts responding to the recent special section in PoPS on social priming and replication/reproducibility, which we will publish in the coming weeks. It has proven easier to find critics of social priming than to find defenders of the phenomenon, and if there are primers out there who want to chime in they are most welcome and may contact us at oscblog@googlegroups.com.

The special section in PoPS was immediately prompted by this wonderful November 2012 issue of PoPS on replicability in psychology (open access!), but the Problems with Priming started before that. For those of you who didn’t seat yourselves in front of the screen with a tub of well-buttered popcorn every time behavioral priming made it outside the trade journals, I’ll provide some back-story, along with links to posts and articles that frame the current response.

The mitochondrial Eve of behavioral priming is Bargh’s Elderly Prime¹. The unsuspecting participants were given scrambled sentences and were asked to create proper sentences out of four of the five words in each. Some of the sentences included words like Bingo or Florida, words that may have made you think of the elderly if you were a student in New York in the mid-nineties. The researchers then measured the speed with which each participant walked down the corridor to return their work, and, surprising to many, those who unscrambled sentences that included “Bingo” and “Florida” walked more slowly than those who did not. Conclusion: the construct of “elderly” had been primed, causing participants to adjust their behavior (slower walk) accordingly. You can check out sample sentences in this Marginal Revolution post; yes, priming made it to this high-traffic economics blog.

This paper has been cited 2571 times so far (according to Google Scholar). It even appears in Kahneman’s Thinking, Fast and Slow, and has been high on the wish-list for replication on Pashler’s PsychFileDrawer (no longer in the top 20, though).

Finally, in January 2012, Doyen, Klein, Pichon & Cleeremans (a Belgian group) published a replication attempt in PLOS ONE in which they suggest the effect was due to demand. Ed Yong did this nice write-up of the research.

Bargh was not amused, and wrote a scathing rebuttal on his blog in the Psychology Today domain. He took it down after some time (for good reason; I think it can still be found, but I won’t look for it). Ed commented on this too.

A number of good posts from blogging psychological scientists also commented on the story. A sampling includes Sanjay Srivastava on his blog The Hardest Science, Chris Chambers on NeuroChambers, and Cedar Riener on his Cedarsdigest.

The British Psychological Society published a notice about it in The Psychologist, which links to additional commentary. In May, Ed Yong had an article in Nature discussing the status of non-replication in psychology in general, in which he also brings up the Doyen/Bargh controversy. On January 13, the Chronicle published a summary of what had happened.

But prior to that, Daniel Kahneman made a call for psychologists to clean up their act as far as behavioral priming goes. Ed Yong (again) published two pieces about it, one in Nature and one on his blog.

The controversies surrounding priming continued in the spring of 2013. This time it was David Shanks who, as a hobby (see his video; scroll down below the fold), had taken to attempting to replicate the priming of intelligence, work originally done by Dijksterhuis and van Knippenberg in 1998. He had his students perform a series of replications, all of which showed no effect, and these were then collected in this PLOS ONE paper.

Dijksterhuis retorted in the comment section². Rolf Zwaan blogged about it. Then Nature posted a breathless article suggesting that this was a fresh blow for those of us who are social psychologists.

Now, most of us who do science thought instead that this was science working just as it ought to, and blogged up a storm about it, with some of the posts (including one of mine) linked in Ed Yong’s “Missing links” feature. The links are all in the fourth paragraph, above the scroll, and include additional links to discussions on replicability and the damage done by a certain Dutch fraudster.

So here you are, ready for the next set of installments.

¹ Ancestral to this is Srull & Wyer’s (1979) story of Donald, who is either hostile or kind, depending on which set of sentences the participant unscrambled in that earlier experiment that had nothing to do with judging Donald.

² A nice feature: no waiting years for the retorts to be published in the dead-tree variant we all get as PDFs anyway.

Mar 6, 2014

by Russ Clay

(Thanks to Shauna Gordon-McKeon, Fred Hasselman, Daniël Lakens, Sean Mackinnon, and Sheila Miguez for their contributions and feedback to this post.)

I recently took on the task of calculating a confidence interval around an effect size stemming from a noncentral statistical distribution (the F-distribution, to be precise). This was new to me. I believe such procedures would add value to work in the social and behavioral sciences, but they are not common in practice at present, possibly due to lack of awareness, so I wanted to pass along some of the things that I found.

In an effort to estimate the replicability of psychological science, an important first step is to determine the criteria for declaring a given replication attempt successful. Lacking clear consensus on these criteria, the Open Science Collaboration decided that, rather than settling on a single set of criteria for assessing the replicability of psychological research, it would employ multiple methods, all of which provide valuable insight into the reproducibility of published findings in psychology (Open Science Collaboration, 2012). One such method is to examine the confidence interval around the original target effect and see whether it overlaps with the confidence interval around the replication effect. However, estimating the confidence interval around many effects in social science research requires the use of noncentral probability distributions, and most mainstream statistical packages (e.g., SAS, SPSS) do not provide off-the-shelf capabilities for deriving confidence intervals from these distributions (Kelley, 2007).

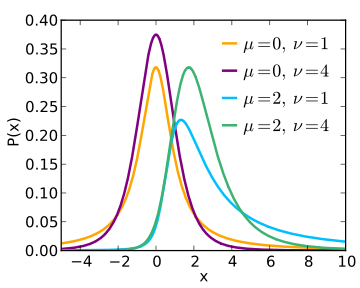

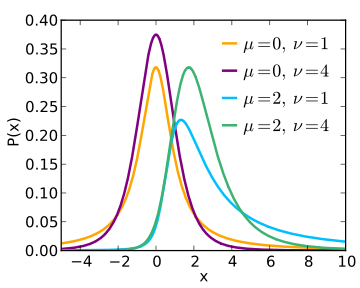

Most of us probably picture common statistical distributions such as the t-distribution, the F-distribution, and the χ² distribution as two-dimensional, with the x-axis representing the value of the test statistic and the area under the curve over a range of x-values representing the probability of observing a value in that range in a sample. When we first learned to conduct these statistical tests, such visual representations likely provided a helpful way to convey the concept that more extreme values of the test statistic are less likely. In the realm of null hypothesis significance testing (NHST), this provides a tool for visualizing how extreme the test statistic would need to be before we would be willing to reject a null hypothesis. However, it is important to remember that these distributions vary along an additional parameter as well: the noncentrality parameter. The distribution that we use to determine the cut-off points for rejecting a null hypothesis is a special, central case of the distribution in which the noncentrality parameter is zero. This special-case distribution gives the probabilities of test statistic values when the null hypothesis is true (i.e., when the population effect is zero). As the noncentrality parameter changes (i.e., when we assume that an effect does exist), the shape of the distribution that defines the probabilities of obtaining various values of the test statistic changes as well. The following figure (copied from the Wikipedia page for the noncentral t-distribution) might help provide a sense of how the shape of the t-distribution changes as the noncentrality parameter varies.

Figure by Skbkekas, licensed CC BY 3.0.

The first two plots (orange and purple) illustrate the different shapes of the distribution under the assumption that the true population parameter (the difference in means) is zero. The value of ν indicates the degrees of freedom used to determine the probabilities under the curve. The difference between these first two curves stems from the fact that the purple curve has more degrees of freedom (a larger sample), and thus there is a higher probability of observing values near the mean. These distributions are central (and symmetrical), so values of x that are equally far above or below the mean are equally probable. The second two plots (blue and green) illustrate the shapes of the distribution under the assumption that the noncentrality parameter is two (i.e., that a true effect exists). Notice that both of these curves are positively skewed, and that this skewness is particularly pronounced in the blue curve, as it is based on fewer degrees of freedom (a smaller sample). The important thing to note is that for these plots, values of x that are equally far above or below the center of the distribution are NOT equally probable: observing a value of x = 4 when the noncentrality parameter is two is considerably more probable than observing a value of x = 0. Because of this, a confidence interval around an effect that is anything other than zero will be asymmetrical and will require a bit of work to calculate.
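The asymmetry can be checked by simulation. The Python sketch below (standard library only) draws from a noncentral t with a noncentrality parameter of 2, using the textbook construction (Z + ncp) / √(V/ν), with Z standard normal and V chi-square with ν degrees of freedom, and counts how many draws land in equally wide windows around 4 and around 0. The choice of ν = 5, the window width, the seed, and the number of draws are arbitrary assumptions for illustration, not values read off the figure.

```python
import math
import random

def noncentral_t_draw(df, ncp, rng):
    """One draw from a noncentral t: (Z + ncp) / sqrt(V / df), V ~ chi-square(df)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(df / 2.0, 2.0)  # chi-square(df) = Gamma(shape=df/2, scale=2)
    return (z + ncp) / math.sqrt(v / df)

rng = random.Random(2014)  # fixed seed so the run is reproducible
draws = [noncentral_t_draw(df=5, ncp=2.0, rng=rng) for _ in range(100_000)]

# Equal-width windows centered two units above and two units below ncp = 2
near_four = sum(3.75 <= x <= 4.25 for x in draws)
near_zero = sum(-0.25 <= x <= 0.25 for x in draws)
print(f"draws near 4: {near_four}  draws near 0: {near_zero}")
```

With these settings the window around 4 should collect noticeably more draws than the window around 0; as the degrees of freedom grow, the two counts move closer together, in line with the milder skew of the curve based on the larger sample.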

Because the shape (and thus the degree of symmetry) of many statistical distributions depends on the size of the effect that is present in the population, we need a noncentrality parameter to help determine the shape of the distribution and the boundaries of any confidence interval for the population effect. As mentioned previously, these complexities do not arise as often as we might expect in everyday research, because when we use these distributions in the context of null hypothesis significance testing (NHST), we can assume a special, ‘central’ case of the distributions that occurs when the true population effect of interest is zero (the typical null hypothesis). However, confidence intervals can provide different information than what can be obtained through NHST. When testing a null hypothesis, what we glean from our statistics is the probability of obtaining an effect at least as large as the one observed in our sample if the true population effect is zero. The p-value represents this probability and is derived from a probability curve with a noncentrality parameter of zero. As mentioned above, these special cases of statistical distributions such as the t, F, and χ² are ‘central’ distributions. On the other hand, when we wish to construct a confidence interval for a population effect, we are no longer in the NHST world, and we no longer operate under the assumption of ‘no effect’. In fact, when we build a confidence interval, we are not necessarily making any assumptions about the existence or non-existence of an effect. Instead, we want a range of values that is likely to contain the true population effect with some degree of confidence.

To be crystal clear, when we construct a 95% confidence interval around an effect estimate, what we are saying is that if we repeatedly drew random samples of the same size from the target population under identical conditions and built an interval the same way each time, 95% of those intervals would contain the true population parameter.
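The repeated-sampling interpretation can be demonstrated directly. The Python sketch below swaps in the simplest possible case, a z-interval for a normal mean with the population standard deviation assumed known, rather than a noncentral-distribution interval, because the coverage logic is the same: draw many samples, build an interval from each, and count how often the intervals contain the true value. The true mean, standard deviation, sample size, number of replications, and seed are arbitrary assumptions.

```python
import random
from statistics import NormalDist, fmean

def coverage(true_mean=0.5, sigma=1.0, n=30, reps=10_000, level=0.95, seed=1):
    """Share of z-intervals (sigma known) that contain the true mean."""
    rng = random.Random(seed)
    crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    half_width = crit * sigma / n ** 0.5
    hits = 0
    for _ in range(reps):
        sample_mean = fmean(rng.gauss(true_mean, sigma) for _ in range(n))
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / reps

print(f"95% interval coverage: {coverage():.3f}")
```

The printed share should land close to 0.95. Any single interval either contains the true mean or does not; the 95% describes the long-run behavior of the procedure.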

From a practical standpoint, a confidence interval can tell us everything that NHST can, and then some. If the 95% confidence interval for a given effect contains zero, then zero remains a plausible value for the effect, and a negligible or null effect cannot be ruled out. In this case, the conclusion you would reach as a researcher is conceptually similar to declining to reject a null hypothesis of zero effect on the grounds that, if the effect were actually zero, a result at least as extreme as yours would occur more than 5% of the time. However, a confidence interval also allows the researcher to say something about the plausible size of a population effect and about the precision of its estimate, whereas NHST only permits the researcher to decide, at a specified significance level, whether to reject the hypothesis that there is no effect at all.
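The correspondence between an interval and a test can be seen in a simple setting. In the Python sketch below, which assumes a normally distributed estimate with a known standard error (a Wald or z setting, not the noncentral case discussed in this post), the symmetric 95% interval contains zero exactly when the two-sided p-value is at least .05. The estimates and standard error are hypothetical; for intervals built from noncentral distributions, the correspondence with a particular test can differ in detail.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def z_test_and_ci(estimate, se, level=0.95):
    """Two-sided p-value for H0: effect = 0, plus the matching symmetric CI."""
    z = estimate / se
    p = 2 * (1 - Z.cdf(abs(z)))
    crit = Z.inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return p, (estimate - crit * se, estimate + crit * se)

for est in (0.15, 0.25, 0.40):  # hypothetical estimates, each with SE = 0.10
    p, (lo, hi) = z_test_and_ci(est, 0.10)
    print(f"estimate={est:.2f}  p={p:.3f}  95% CI=[{lo:.2f}, {hi:.2f}]  "
          f"contains 0: {lo <= 0 <= hi}")
```

Beyond the accept/reject decision, the interval's width shows how precisely each estimate pins down the effect.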

Why, then, is NHST the overwhelming choice of researchers in the social sciences? The likely answer has to do with the idea of noncentrality discussed above. When we build a confidence interval around an effect size, we generally do not build it around an effect of zero. Instead, we build it around the effect that we find in our sample. As such, we are unable to build the confidence interval using the symmetrical, special-case versions of many of our statistical distributions. We have to build it using an asymmetrical distribution whose shape (degree of noncentrality) depends on the effect that we found in our sample. This gets messy and complicated, and requires a lot of computation. As such, calculating these confidence intervals was not practical until researchers commonly had modern computing power at their disposal. However, research in the social sciences has been around much longer than your everyday, affordable, quad-core laptop, and because building confidence intervals around effects from noncentral distributions was impractical for much of the history of the social sciences, these techniques were not often taught; their lack of use is likely an artifact of institutional history (Steiger & Fouladi, 1997).

All of this to say that in today’s world, researchers generally have more than enough computational power at their disposal to easily and efficiently construct a confidence interval around an effect from a non-central distribution. The barriers to these statistical techniques have been largely removed, and as the value of the information obtained from a confidence interval exceeds the value of the information that can be obtained from NHST, it is useful to spread the word about resources that can help in the computation of confidence intervals around common effect size metrics in the social and behavioral sciences.

One resource that I found to be particularly useful is the MBESS (Methods for the Behavioral, Educational, and Social Sciences) package for the R statistical software platform. For those unfamiliar with R, it is a free, open-source statistical software package that runs on Unix, Mac, and Windows platforms. The standard R software contains basic statistics functionality, but also allows contributors to develop their own functionality (typically referred to as ‘packages’), which can be made available to the larger user community for download. MBESS is one such package; at the time of writing, it provides ninety-seven different functions for statistical procedures commonly used in the behavioral, educational, and social sciences. Twenty-five of these functions involve the calculation of confidence intervals or confidence limits, mostly for statistics stemming from noncentral distributions.

For example, I used the ci.pvaf (confidence interval for the proportion of variance accounted for) function from the MBESS package to obtain a 95% confidence interval around an η² effect of 0.11 from a one-way between-groups analysis of variance. To do this, I only needed to supply the function with a few relevant arguments:

- F-value (F.value): the F-value from a fixed-effects ANOVA

- df (df.1, df.2): the numerator and denominator degrees of freedom from the analysis

- N (N): the sample size

- Confidence level (conf.level): the confidence level coverage that you desire (e.g., 95%)

No more information is required. Based on this, the function can calculate the desired confidence interval around the effect. Here is a copy of the code that I entered and what it produced, with comments explaining each step:

```r
# After installing MBESS (e.g., install.packages("MBESS")), load it for this session
library(MBESS)

# 95% CI for the proportion of variance accounted for: F = 4.97 with
# 2 between-groups (df.1) and 81 within-groups (df.2) degrees of freedom, N = 84
ci.pvaf(F.value = 4.97, df.1 = 2, df.2 = 81, N = 84, conf.level = .95)
```

Executing the above command produces the following output:

```
$Lower.Limit.Proportion.of.Variance.Accounted.for
[1] 0.007611619

$Probability.Less.Lower.Limit
[1] 0.025

$Upper.Limit.Proportion.of.Variance.Accounted.for
[1] 0.2320935

$Probability.Greater.Upper.Limit
[1] 0.025

$Actual.Coverage
[1] 0.95
```

Thus, the 95% confidence interval around my η² effect is [0.01, 0.23].
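To make the noncentral computation less of a black box, here is a minimal, standard-library Python sketch of what a function like ci.pvaf has to do: find the noncentrality parameters λ for which the observed F sits at the 97.5th and 2.5th percentiles of the noncentral F distribution, then convert each limit to a proportion of variance with λ / (λ + N). This is an independent re-implementation under stated assumptions (the Poisson-mixture formula for the noncentral F CDF, a continued-fraction incomplete beta function, bisection, and that conversion), not MBESS's code, and all helper names are hypothetical. With the inputs from the R example above, it should reproduce the MBESS limits to about three decimal places.

```python
import math

def _betacf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step, then odd step, of the continued fraction
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:  # last factor ~1 means converged
            break
    return h

def _betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b

def ncf_cdf(f, df1, df2, ncp):
    """P(F' <= f) for a noncentral F: a Poisson(ncp/2) mixture of beta CDFs."""
    y = df1 * f / (df1 * f + df2)
    half = ncp / 2.0
    if half == 0.0:
        return _betai(df1 / 2.0, df2 / 2.0, y)  # central F
    total = 0.0
    for j in range(int(half + 8.0 * math.sqrt(half) + 20) + 1):
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * _betai(df1 / 2.0 + j, df2 / 2.0, y)
    return total

def _solve_ncp(f, df1, df2, target, tol=1e-8):
    """Bisection for the ncp at which ncf_cdf(f, ...) equals target.

    The CDF at a fixed f decreases as ncp grows, which makes bisection safe.
    """
    if ncf_cdf(f, df1, df2, 0.0) <= target:
        return 0.0  # no positive ncp works; the limit is set to zero
    lo, hi = 0.0, 1.0
    while ncf_cdf(f, df1, df2, hi) > target:  # expand until the root is bracketed
        lo, hi = hi, 2.0 * hi
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ncf_cdf(f, df1, df2, mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def ci_pvaf_sketch(f, df1, df2, n, conf_level=0.95):
    """Equal-tailed CI for the proportion of variance accounted for (eta^2)."""
    alpha = 1.0 - conf_level
    ncp_lower = _solve_ncp(f, df1, df2, 1.0 - alpha / 2.0)  # observed F at 97.5th pct
    ncp_upper = _solve_ncp(f, df1, df2, alpha / 2.0)        # observed F at 2.5th pct
    # Assumed conversion from noncentrality to proportion of variance
    return ncp_lower / (ncp_lower + n), ncp_upper / (ncp_upper + n)

lower, upper = ci_pvaf_sketch(4.97, 2, 81, 84)
print(f"95% CI for eta^2: [{lower:.3f}, {upper:.3f}]")
```

Note that the interval is not centered on the observed η² of about 0.11, which is the asymmetry described earlier. If the observed F is not far enough into the upper tail of the central F distribution, no positive λ satisfies the lower-limit condition, and the sketch sets that limit to zero.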

Similar functions are available in the MBESS package for calculating confidence intervals around a contrast in a fixed-effects ANOVA, multiple correlation coefficient, squared multiple correlation coefficient, regression coefficient, reliability coefficient, RMSEA, standardized mean difference, signal-to-noise ratio, and χ² parameters, among others.

Additional Resources

- Fred Hasselman has created a brief tutorial for computing effect size confidence intervals using R.

- For those more familiar with conducting statistics in an SPSS environment, Dr. Karl Wuensch at East Carolina University provides links to several SPSS programs on his web page. This program is for calculating confidence intervals for a standardized mean difference (Cohen’s d).

- In addition, I came across several publications that I found useful in providing background information regarding noncentral distributions (a few of which are cited above). I’m sure there are more, but I found these to be a good place to start:

Cumming, G. (2006). How the noncentral t distribution got its hump. Paper presented at the seventh International Conference on Teaching Statistics, Salvador, Bahia, Brazil.

Cumming, G. (2014). The new statistics: Why and how. Psychological Science, 25, 7-29. doi:10.1177/0956797613504966

Kelley, K. (2007). Confidence intervals for standardized effect sizes: Theory, application, and implementation. Journal of Statistical Software, 20, 1-24.

Smithson, M. (2001). Correct confidence intervals for various regression effect sizes and parameters: The importance of noncentral distributions in computing intervals. Educational and Psychological Measurement, 61(4), 605-632. doi:10.1177/00131640121971392

Steiger, J. H., & Fouladi, R. T. (1997). Noncentrality interval estimation and the evaluation of statistical models. In L. Harlow, S. Mulaik, & J. Steiger (Eds.), What if there were no significance tests? (pp. 221-256). Mahwah, NJ: Erlbaum.

Hopefully others find this information as useful as I did!

Feb 27, 2014

by Ruben Arslan

Pre-registration is starting to outgrow its old home, clinical trials. Because it is a good way to (a) show that your theory can make viable predictions and (b) show that your empirical finding is not vulnerable to hypothesising after the results are known (HARKing) and some other questionable research practices, more and more scientists endorse and actually practise pre-registration. Many remain wary, though, and some simply think pre-registration cannot work for their kind of research. A recent amendment (October 2013) to the Declaration of Helsinki mandates public registration of all research on humans before the first subject is recruited, as well as the publication of all results: positive, negative, and inconclusive.

For some areas of science, the widespread “fishing for significance” metaphor illustrates the problem well: like an experimental scientist, the fisherman casts out the rod many times, tinkering with a variety of baits and bobbers, one at a time, trying to make a good catch, but possibly developing a superstition about the best bobber. And, like an experimental scientist, if he returned the next day to the same spot, it would be easy to check whether the success of the bobber replicates. If he prefers to tell fishing lore and enshrine his bobber in a display at his home, other fishermen can evaluate his lore by doing as he did in his stories.

Some disciplines (epidemiology, economics, developmental and personality psychology come to mind) proceed, quite legitimately, more like fishing trawlers; that is to say, data collection is a laborious, time-consuming, collaborative endeavour. Because these operations are so large and complex, some data bycatch will inevitably end up in the dragnet.


Feb 5, 2014

by

Bryan Burnham

Among other things, the open science movement encourages “open data” practices, that is, researchers making data freely available on personal or lab websites or in institutional repositories for others to use. For some, open data is a necessity, as the NIH and NSF have adopted data-sharing policies and require some grant applications to include data management and dissemination plans. According to the NIH:

“...all data should be considered for data sharing. Data should be made as widely and freely available as possible while safeguarding the privacy of participants, and protecting confidential and proprietary data.” (emphasis theirs)

Before making human subject data open, several issues must be considered. First, data should be de-identified to maintain subject confidentiality, so that responses cannot be linked to identities and the data are effectively anonymous. Second, researchers should consider their Institutional Review Board’s (IRB) policies about data sharing. (Disclosure: I have been a member of my university's IRB for 6 years and chair of my Departmental Review Board, DRB, for 7 years.)

Unfortunately, while the policies and procedures of all IRBs require researchers to obtain consent, disclose study procedures to subjects, and maintain confidentiality, it is unknown how many IRBs have policies and procedures for open data dissemination. Thus, a conflict may arise between the judgements of IRBs and researchers who want to adopt open data practices or need to disseminate data (those with NIH or NSF grants).

This is an especially important issue for those who want to share data that have already been collected: can such data be openly disseminated without IRB review? (I address this below when I offer recommendations.) What can researchers do when they want or need to share data freely but their IRB does not have a clear policy? And what say does an IRB have in open data practices?

Although IRBs should be consulted and informed about open data, as I delineate below, IRBs are not now, and were never intended to be, data-monitoring groups (Bankert & Amdur, 2000). Given the purview, scope, and responsibilities set out in the regulations that govern them, IRBs have little say in whether a researcher can share data.

IRBs in the United States are regulated under US Department of Health and Human Services (HHS) guidelines for the Protection of Human Subjects. The guidelines describe the composition of IRBs and their record keeping, define levels of risk, list the specific duties of IRBs, and hint at their limits.

When they function appropriately, IRBs review research protocols to (1) evaluate risks; (2) determine whether subject confidentiality is maintained, that is, whether responses are linked to identities (‘confidentiality’ differs from ‘privacy’, which means others will not know a person participated in a study); and (3) evaluate whether subjects are given sufficient information about risks, procedures, privacy, and confidentiality. HHS Regulations Part 46, Subpart A, Section 111 ("Criteria for IRB Approval of Research") (a)(2), is very specific about the purview of IRBs in evaluating protocols:

"In evaluating risks and benefits, the IRB should consider only those risks and benefits that may result from the research (as distinguished from risks and benefits of therapies subjects would receive even if not participating in the research). The IRB should not consider possible long-range effects of applying knowledge gained in the research (for example, the possible effects of the research on public policy) as among those research risks that fall within the purview of its responsibility." [emphasis added]

And regulations §46.111 (a)(6) and (a)(7) state that IRBs are to evaluate the safety, privacy, and confidentiality of subjects in proposed research:

(a)(6) "When appropriate, the research plan makes adequate provision for monitoring the data collected to ensure the safety of subjects.”

(a)(7) “When appropriate, there are adequate provisions to protect the privacy of subjects and to maintain the confidentiality of data."

The regulations make it clear that IRBs should consider only risks directly related to the study, and explicitly forbid IRBs from evaluating potential long-range effects of new knowledge gained from the study, such as new knowledge resulting from data sharing. Thus, IRBs should concern themselves with evaluating a study for safety, confidentiality, and adequate disclosure of information; reviewing existing data for dissemination is not under the purview of the IRB. The only issue about open data that should concern IRBs is whether the data are de-identified to “...protect the privacy of subjects and to maintain the confidentiality of data.” It is not the responsibility of the IRB to monitor data; that responsibility falls to the researcher.

Nonetheless, IRBs may take the position that they are data monitors and deny a researcher’s request to openly disseminate data. In denying a request, an IRB may argue that ‘subjects would not have participated if they knew the data would be openly shared.’ In this case, the IRB would be playing mind-reader; there is no way an IRB can assume subjects would not have participated if they had known the data would be openly shared. Whether a person would decline to participate if informed of a researcher’s intent to openly disseminate data is an empirical question.

Also, with this argument the IRB is implicitly suggesting that subjects should have been informed about open data dissemination in the consent form. But such a requirement for consent forms neglects other federal guidelines. The Belmont Report sets out responsibilities for researchers working with human subjects, much like the APA's ethical principles, and describes what information should be included in the consent process:

“Most codes of research establish specific items for disclosure intended to assure that subjects are given sufficient information. These items generally include: the research procedure, their purposes, risks and anticipated benefits, alternative procedures (where therapy is involved), and a statement offering the subject the opportunity to ask questions and to withdraw at any time from the research.”

The Belmont Report does not even mention that subjects should be informed about the potential long-range plans for, or uses of, the data they provide. Indeed, researchers do not have to tell subjects what analyses will be used, and for good reason. All the Belmont Report requires is that subjects be informed about the purpose and procedures of the study and about the privacy and confidentiality of their responses.

Another argument an IRB could make is the data could be used maliciously. For example, a researcher could make a data set open that included ethnicity and test scores and someone else could use that data to show certain ethnic groups are smarter than others. (This example is based on a recent Open Science Framework post that is the basis for this post.)

Although it is more likely that open data would be used as intended, someone could use the data in ways that were not intended and may find a relationship between ethnicity and test scores. So what? The data are not malicious or problematic; it is the person using (misusing?) the data, and IRBs should not be in the habit of allowing only politically correct research to proceed (Lilienfeld, 2010). Also, by considering what others might do with open data, IRBs would be mind-reading and overstepping their purview by considering “...long-range effects of applying knowledge gained in the research (for example, the possible effects of the research on public policy).”

The bottom line is that IRBs cannot know whether subjects would have declined to participate in a project had they known the data would be openly disseminated, nor can they anticipate what others might find in the data. Federal regulations inform IRBs of their specific duties, which do not include data monitoring or making judgments on open data dissemination; those duties are the responsibilities of the researcher.

So what should you do if you want to make your data open? First, don't fear the IRB, but don’t forget the IRB. Perhaps re-examine IRB policies any time you plan a new project to remind yourself of the IRB requirements.

Second, making your data open does depend on what subjects agree to on the consent form, and this is especially important if you want to make existing data open. If subjects are told their participation will remain private (identities not disclosed) and responses will remain confidential (identities not linked to responses), openly disseminating de-identified data would not violate the agreement. However, if subjects were told the data would ‘not be disseminated’, the researcher may violate the agreement if they openly share data. In this case the IRB would need to be involved, subjects may need to re-consent to allow their responses to be disseminated, and new IRB approval may be needed as the original consent agreement may change.

Third, de-identify data sets you plan to make open. This includes removing names, student IDs, subject numbers, timestamps, and anything else that could be used to uniquely identify a person.
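A minimal sketch of this step in Python, assuming the data are held as a list of dictionaries (one per subject). The field names in `DIRECT_IDENTIFIERS` are hypothetical placeholders for whatever your own data set uses. Dropping these fields removes direct identifiers only; combinations of the remaining variables can still identify someone, so they need checking too.

```python
# Hypothetical names of directly identifying fields; adjust to your own data set.
DIRECT_IDENTIFIERS = {"name", "student_id", "subject_number", "timestamp"}


def drop_identifiers(records, identifiers=DIRECT_IDENTIFIERS):
    """Return copies of the records with every identifying field removed."""
    return [
        {field: value for field, value in record.items() if field not in identifiers}
        for record in records
    ]


raw = [
    {"name": "A. Smith", "student_id": "B00123", "timestamp": "2014-02-05 10:31",
     "condition": "control", "score": 17},
]
print(drop_identifiers(raw))  # [{'condition': 'control', 'score': 17}]
```

The original records are left untouched, so the identified file can stay in the researcher's own secure storage while only the stripped copy is shared.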

Fourth, inform your IRB and department of your intentions. Describe your de-identification process and that you are engaging in open data practices as you see appropriate while maintaining subject confidentiality and privacy. (If someone objects, direct them toward federal IRB regulations.)

Finally, work with your IRB to develop guidelines and policies for data sharing. Given the speed and recency of the open science and open data movements, it is unlikely many IRBs have considered such policies.

We want greater transparency in science, and open data is one practice that can help. The IRB should not be seen as a hurdle or barrier to disseminating data, but as a reminder that one of the best practices in science is to ensure the integrity of our data and information communications by responsibly maintaining the confidentiality and privacy of our research subjects.

References

Bankert, E., & Amdur, R. (2000). The IRB is not a data and safety monitoring board. IRB: Ethics and Human Research, 22(6), 9-11.

De Wolfe, V. A., Sieber, J. E., Steel, P. M., & Zarate, A. O. (2005). Part I: What is the requirement for data sharing? IRB: Ethics and Human Research, 27(6), 12-16.

De Wolfe, V. A., Sieber, J. E., Steel, P. M., & Zarate, A. O. (2006). Part III: Meeting the challenge when data sharing is required. IRB: Ethics and Human Research, 28(2), 10-15.

Lilienfeld, S. O. (2010). Can psychology become a science? Personality and Individual Differences, 49, 281-288.

Jan 29, 2014

by

Sean Mackinnon

Nothing is really private anymore. Corporations like Facebook and Google have been collecting our information for some time, and selling it in aggregate to the highest bidder. People have been raising concerns over these invasions of privacy, but generally only technically savvy, highly motivated people can really succeed at remaining anonymous in this new digital world.

For a variety of incredibly important reasons, we are moving towards open research data as a scientific norm – that is, micro datasets and statistical syntax openly available to anyone who wants them. However, some people are uncomfortable with open research data because they have concerns about privacy and confidentiality violations. Some of these violations are even making the news: a high-profile case about people being identified from their publicly shared genetic information comes to mind.

With open data comes increased responsibility. As researchers, we need to take particular care to balance the advantages of data-sharing with the need to protect research participants from harm. I’m particularly primed for this issue because my own research often intersects with clinical psychology. I ask questions about things like depression, anxiety, eating disorders, substance use and conflict with romantic partners. The data collected in many of my studies have the potential to seriously harm the reputation – and potentially the mental health – of participants if linked to their identity by a malicious person. This said, I believe in the value of open data sharing. In this post, I’m going to discuss a few core issues as they pertain to de-identification – that is, ensuring the anonymity of participants in an openly shared dataset. Violations of privacy will always be a risk; however, some relatively simple steps on the part of the researcher can make re-identification of individual participants much more challenging.

Who are we protecting the data from?

Throughout the process, it’s helpful to imagine yourself as a person trying to get dirt on a potential participant. Of course, this is ignoring the fact that very few people are likely to use data for malicious purposes … but for now, let’s just consider the rare cases where this might happen. It only takes one high-profile incident to be a public relations and ethics nightmare for your research! There are two possibilities for malicious users that I can think of:

- Identity thieves who don’t know the participant directly, but are looking for enough personal information to duplicate someone’s identity for criminal activities, such as credit card fraud. These users are unlikely to know anything about participants ahead of time, so they have a much more challenging job because they have to identify people exclusively using publicly available information.

- People who know the participant in real life and want to find out private information about someone for some unpleasant purpose (e.g., stalkers, jealous romantic partners, a fired employee, etc.). In this case, the party likely knows (a) that the person of interest is in your dataset and (b) basic demographic information on the person such as sex, age, occupation, and the city they live in. Whether or not this user is successful in identifying individuals in an open dataset depends on what exactly the researcher has shared. For fine-grained data, it could be very easy; however, for properly de-identified data, it should be virtually impossible.

Key Identifiers to Consider when De-Identifying Data

The primary way to safeguard privacy in publicly shared data is to avoid identifiers; that is, pieces of information that can be used directly or indirectly to determine a person’s identity. A useful starting point for this is the list of 18 identifiers that the US Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule specifies for de-identifying Protected Health Information; the full list appears in the rule’s “Safe Harbor” de-identification standard. Many of these identifiers are obvious (e.g., no names, phone numbers, SIN numbers, etc.), but some identifiers are worth discussing more specifically in the context of psychological research in which data are shared openly.

Demographic variables. Most of the variables that psychologists are interested in are not going to be very informative for identifying individuals. For example, reaction time data (even if unique to an individual) are very unlikely to identify participants – and in any event, most people are unlikely to care if other people know that they respond 50ms faster to certain types of visual stimuli. The types of data that are generally problematic are what I’ll call “demographic variables”: things like sex, ethnicity, age, occupation, university major, etc. These data are sometimes used in analyses, but most often they are just used to characterize the sample in the participants section of manuscripts. Most of the time, demographic variables can’t be used in isolation to identify people; instead, combinations of variables are used (e.g., a 27-year-old Mexican woman who works as a nurse may be the only person with that combination of traits in the data, leaving her vulnerable to loss of privacy). Because the combination of several demographic characteristics can potentially produce identifiable profiles, a common rule of thumb I picked up when working with Statistics Canada is to require a minimum of 5 participants per cell. In other words, if a particular combination of demographic features yields fewer than 5 individuals, the group is collapsed into a larger, more anonymous aggregate group. The most common example of this would be using age ranges (e.g., ages 18-25) instead of exact ages; similar logic could apply to most demographic variables. This rule can get restrictive fast (which also demonstrates how little data can be required to identify individual people!), so ideally, share only the demographic information that is theoretically and empirically important to your research area.
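The minimum-cell-size rule can be checked mechanically. The Python sketch below is an illustration, not Statistics Canada's own procedure: it counts how many records share each combination of the chosen demographic variables, flags combinations held by fewer than 5 people, and shows how collapsing exact ages into ranges reduces the number of flagged cells. The field names, the toy data, and the 8-year band width (chosen so the first band is 18-25) are assumptions.

```python
from collections import Counter

MIN_CELL_SIZE = 5  # minimum number of participants per demographic combination


def age_band(age, width=8, start=18):
    """Collapse an exact age into a range label such as '18-25' (hypothetical banding scheme)."""
    if age < start:
        return f"under {start}"
    low = start + ((age - start) // width) * width
    return f"{low}-{low + width - 1}"


def small_cells(records, keys, min_size=MIN_CELL_SIZE):
    """Return each combination of `keys` shared by fewer than `min_size` records, with its count."""
    counts = Counter(tuple(record[k] for k in keys) for record in records)
    return {combo: n for combo, n in counts.items() if n < min_size}


# Toy data: one 27-year-old female nurse and five male students aged 18 to 22.
people = [{"sex": "F", "age": 27, "occupation": "nurse"}] + [
    {"sex": "M", "age": a, "occupation": "student"} for a in (18, 19, 20, 21, 22)
]
keys = ["sex", "age", "occupation"]
print(len(small_cells(people, keys)))  # 6: with exact ages, every person is unique

banded = [{**p, "age": age_band(p["age"])} for p in people]
print(small_cells(banded, keys))  # {('F', '26-33', 'nurse'): 1}
```

Any combination still flagged after banding, like the lone nurse here, would need further collapsing (for example, a broader occupation category) or suppression before the data are shared.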

Outliers and rare values. Another major issue is outliers and other rare values. Outliers are variably defined depending on the statistical text you read, but generally refer to extreme values of variables measured on continuous, interval, or ordinal scales (e.g., someone in your sample has an IQ of 150, and the next highest person has 120). Rare values refer to categorical responses that very few people endorse (e.g., the only physics professor in a sample). There are lots of different ways to deal with outliers, and there’s not necessarily a lot of agreement on which is best – indeed, it’s one of those researcher degrees of freedom you might have heard about. Though this may depend on the sensitivity of the data in question, outliers can often pose a privacy risk. From a privacy standpoint, it may be best for the researcher to deal with outliers by deleting or transforming them before sharing the data. For rare values, you can collapse response options together until there are no more unique values (e.g., perhaps classify the physics professor as a “teaching professional” if there are other teachers in the sample). In the worst case scenario, you may need to report the value as missing data (e.g., a single intersex person in your sample who doesn’t identify as male or female). Whatever you decide, you should disclose your strategy for dealing with outliers and rare values in the accompanying documentation so it is clear for everyone using the data.
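One way to apply these options is sketched below in Python. For outliers it uses top-coding (capping extreme values at a chosen ceiling), which is only one of the possible transformations; for rare values it recodes categories through a researcher-defined table of broader groups and marks anything still unique as missing. The ceiling, the `broader` table, and the threshold of two people per category (i.e., no unique values) are illustrative assumptions that would need to be chosen and documented for real data.

```python
from collections import Counter


def top_code(values, ceiling):
    """Top-coding: replace any value above `ceiling` with the ceiling itself."""
    return [min(v, ceiling) for v in values]


def collapse_rare(categories, broader, min_count=2):
    """Recode categories into broader groups, then mark any value still held by
    fewer than `min_count` people as missing (None)."""
    recoded = [broader.get(c, c) for c in categories]
    counts = Counter(recoded)
    return [c if counts[c] >= min_count else None for c in recoded]


iq = [98, 104, 112, 120, 150]
print(top_code(iq, ceiling=120))  # [98, 104, 112, 120, 120]

jobs = ["physics professor", "high school teacher", "nurse", "nurse", "pilot"]
broader = {"physics professor": "teaching professional",
           "high school teacher": "teaching professional"}
print(collapse_rare(jobs, broader))
# ['teaching professional', 'teaching professional', 'nurse', 'nurse', None]
```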

Dates. Though it might not be immediately obvious, any exact dates in the dataset place participants at risk for re-identification. For example, if someone knew what day the participant took part in a study (e.g., they mention it to a friend; they’re seen in a participant waiting area) then their data would be easily identifiable by this date. To minimize privacy risks, no exact dates should be included in the shared dataset. If dates are necessary for certain analyses, transforming the data into some less identifiable format that is still useful for analyses is preferable (e.g., have variables for “day of week” or “number of days in between measurement occasions” if these are important).
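As a minimal Python sketch of that transformation, assuming each participant's measurement occasions are available as `datetime.date` objects, the hypothetical function below keeps only the day of the week and the number of days between consecutive occasions. The dates in the example are made up.

```python
from datetime import date

DAY_NAMES = ("Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday")


def date_features(session_dates):
    """Replace exact session dates with day-of-week labels and gaps (in days)
    between consecutive sessions, so no calendar date is shared."""
    ordered = sorted(session_dates)
    weekdays = [DAY_NAMES[d.weekday()] for d in ordered]  # weekday(): Monday == 0
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return weekdays, gaps


sessions = [date(2014, 1, 29), date(2014, 2, 12), date(2014, 3, 3)]
print(date_features(sessions))  # (['Wednesday', 'Wednesday', 'Monday'], [14, 19])
```

Using a fixed tuple of day names rather than `strftime("%A")` keeps the output independent of the machine's locale.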

Geographic Locations. The rule of having “no geographic subdivisions smaller than a state” from the HIPAA guidelines is immediately problematic for many studies. Most researchers collect data from their surrounding community. Thus, it will be impossible to blind the geographic location in many circumstances (e.g., if I recruit psychology students for my study, it will be easy for others to infer that I did so from my place of employment at Dalhousie University). So at a minimum, people will know that participants are probably living relatively close to my place of employment. This is going to be unavoidable in many circumstances, but in most cases it should not be enough to identify participants. However, you will need to consider if this geographical information can be combined with other demographic information to potentially identify people, since it will not be possible to suppress this information in many cases. Aside from that, you’ll just have to do your best to avoid more finely grained geographical information. For example, in Canada, a reverse lookup of postal codes can identify some locations with a surprising degree of accuracy, sometimes down to a particular street!
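For Canadian postal codes, one way to coarsen location is to keep only the first three characters, the forward sortation area (FSA), which covers a much larger area than the full six-character code. The Python sketch below assumes codes are stored as text in the usual "A1A 1A1" form, and the function name is hypothetical. Whether an FSA is coarse enough for a given sample is a judgment call; it is still far finer than the HIPAA rule of no subdivision smaller than a state.

```python
import re

# Canadian postal codes look like "A1A 1A1"; the first three characters are the
# forward sortation area (FSA), a much larger region than the full code.
POSTAL_CODE = re.compile(r"([A-Z]\d[A-Z]) ?\d[A-Z]\d")


def coarsen_postal_code(code):
    """Return only the FSA of a Canadian postal code, or None if the code is malformed."""
    match = POSTAL_CODE.fullmatch(code.strip().upper())
    return match.group(1) if match else None


print(coarsen_postal_code("b3h 1x1"))  # B3H
print(coarsen_postal_code("12345"))    # None
```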

Participant ID numbers. Almost every dataset will (and should) have a unique identification number for each participant. If this is just a randomly selected number, there are no major issues. However, most researchers I know generate ID numbers in non-random ways. For example, in my own research on romantic couples we assign ID numbers chronologically, with a suffix number of “1” indicating men and “2” indicating women. So ID 003-2 would be the third couple that participated, and the female within that couple. In this kind of research, the most likely person to snoop would probably be the other romantic partner. If I were to leave the ID numbers as originally entered, the romantic partner would easily be able to find their own partner’s data (assuming a heterosexual relationship and that participants remember their own ID number). There are many other algorithms researchers might use to create ID numbers, many of which do not provide helpful information to other researchers but could be used to identify people. Before freely sharing data, you might consider scrambling the unique ID numbers so that they cannot be a privacy risk (you can, of course, keep a record of the original ID numbers in your own files if needed for administrative purposes).
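A minimal Python sketch of such scrambling, assuming IDs follow the "couple-person" pattern described above (e.g., "003-2"). It randomly relabels the couple number, so participation order is no longer recoverable and a partner cannot find the other partner's data from their own ID, while the within-couple suffix is kept so the two partners stay linked for dyadic analyses. The function name and the three-digit format are assumptions, not an established tool. The returned key belongs in your own files, never in the shared data set; for real data, leave `seed` at its default and do not publish any seed used.

```python
import random


def scramble_couple_ids(ids, seed=None):
    """Randomly relabel the couple part of IDs shaped like '003-2'.

    Returns (id_map, key): id_map maps each old ID to its new ID for the shared
    file; key maps each new couple number back to the original one and should
    be kept privately.
    """
    rng = random.Random(seed)
    couples = sorted({pid.split("-")[0] for pid in ids})
    new_numbers = [f"{n:03d}" for n in range(1, len(couples) + 1)]
    rng.shuffle(new_numbers)  # random permutation: new numbers no longer track order
    couple_map = dict(zip(couples, new_numbers))
    id_map = {}
    for pid in ids:
        couple, person = pid.split("-")
        id_map[pid] = f"{couple_map[couple]}-{person}"  # within-couple suffix kept
    key = {new: old for old, new in couple_map.items()}
    return id_map, key


ids = ["001-1", "001-2", "002-1", "002-2", "003-1", "003-2"]
id_map, key = scramble_couple_ids(ids)
print(id_map)  # new couple numbers vary from run to run
```

With very few couples, some may keep their original number by chance; an outsider has no way of telling which ones, so this does not by itself undo the scrambling.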

Some Final Thoughts

Risk of re-identification is never zero. Especially when data are shared openly online, there will always be a risk for participants. Making sure participants are fully informed about the risks involved during the consent process is essential. Careless sharing of data could result in a breach of privacy, which could have extremely negative consequences both for the participants and for your own research program. However, with proper safeguards, the risk of re-identification is low, in part due to some naturally occurring features of research. The slow, plodding pace of scientific research inadvertently protects the privacy of participants: Databases are likely to be 1-3 years old by the time they are posted, and people can change considerably within that time, making them harder to identify. Naturally occurring noise (e.g., missing data, imputation, errors by participants) also impedes the ability to identify people, and the variables psychologists are usually most interested in are often not likely candidates to re-identify someone.

As a community of scientists devoted to making science more transparent and open, we also carry the responsibility of protecting the privacy and rights of participants as much as possible. I don’t think we have all the answers yet, and there’s a lot more to consider when moving forward. Ethical principles are not static; there is no single “right” answer that will be appropriate for all research, and standards will change as technology and social mores change with each generation. Still, by moving forward with an open mind and a strong ethical conscience to protect the privacy of participants, I believe that data can really be both open and private.